Latest news

Recently, Professor Chen Zhibo's research group at the School of Information Science and Technology made progress in change detection for high-resolution remote sensing images. The paper, entitled "A CNN-Transformer Network Combining CBAM for Change Detection in High-Resolution Remote Sensing Images", was published in Remote Sensing (Q2, IF = 5.349), a top journal in the field of remote sensing.

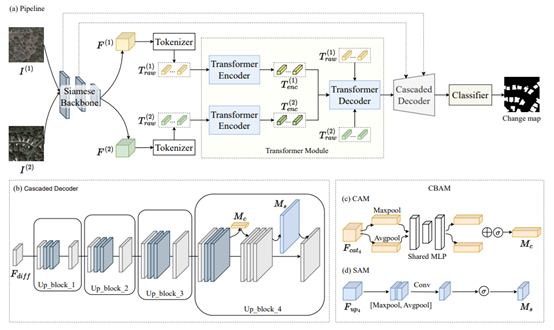

Current deep learning-based change detection approaches mostly achieve convincing results by introducing attention mechanisms into traditional convolutional networks. However, because their receptive fields are limited, convolution-based methods cannot fully model global context or capture long-range dependencies, and are therefore less effective at discriminating pseudo-changes. Transformers can efficiently model global spatio-temporal context, which benefits the feature representation of changes of interest. However, their lack of fine-grained detail may cause them to locate the boundaries of changed regions inaccurately. The paper therefore proposes CTCANet, a hybrid CNN-transformer architecture that combines the strengths of convolutional networks, transformers, and attention mechanisms for change detection in high-resolution bi-temporal remote sensing images. To obtain high-level feature representations that reveal changes of interest, CTCANet uses a tokenizer to embed the features extracted from each image by the convolutional network into a sequence of tokens, and a transformer module to model global spatio-temporal context in token space. The paper also explores the optimal strategy for fusing bi-temporal information. The reconstructed features, which carry deep abstract information, are then fed to a cascaded decoder and aggregated, through skip connections, with features containing shallow fine-grained information. This aggregation enables the model to preserve the completeness of changed regions and to locate small targets accurately. Moreover, the integrated convolutional block attention module (CBAM) smooths the semantic gaps between heterogeneous features and accentuates relevant changes in both the channel and spatial domains, further improving the results.
Experimental results on two publicly available datasets, LEVIR-CD and SYSU-CD, show that CTCANet outperforms several recent state-of-the-art methods.
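The article does not describe the tokenizer's internals. The sketch below shows one common way to turn a convolutional feature map into a short sequence of semantic tokens, similar to the spatial-attention tokenizer used by earlier transformer-based change detectors such as BIT. It is an illustration under assumptions, not CTCANet's actual code. Each token is a softmax-weighted average of pixel features, with the attention logits produced by a 1×1 projection. The function names, weights, and toy feature maps are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(values: List[float]) -> List[float]:
    """Numerically stable softmax over a 1-D list."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def semantic_tokenizer(features: Matrix, weights: Matrix) -> Matrix:
    """Compress a flattened feature map into a few semantic tokens (hypothetical sketch).

    features: HW rows (one per pixel), each holding C channel values.
    weights:  L rows (one per token), each holding C values; this acts like a
              1x1 convolution that produces L spatial attention maps.
    Returns L tokens, each a C-dimensional vector.
    """
    num_channels = len(features[0])
    tokens = []
    for w in weights:
        # Attention logit for every pixel: dot product of its features with w.
        logits = [sum(wc * xc for wc, xc in zip(w, pixel)) for pixel in features]
        attn = softmax(logits)  # normalized over all pixels
        # Token = attention-weighted sum of the pixel features.
        token = [sum(a * pixel[c] for a, pixel in zip(attn, features))
                 for c in range(num_channels)]
        tokens.append(token)
    return tokens


# Hypothetical 2x2 feature maps (flattened to 4 pixels, C = 3) for the two dates.
t1 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, 1.0, 1.0]]
t2 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [5.0, 5.0, 5.0]]
shared_w = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]  # L = 2 tokens, shared by both branches

# Concatenate both dates' tokens into one sequence for a transformer module.
sequence = semantic_tokenizer(t1, shared_w) + semantic_tokenizer(t2, shared_w)
print(len(sequence), len(sequence[0]))  # 4 3
```

Sharing `shared_w` across both images mirrors the Siamese setup, and concatenating the two token sets is only one possible bi-temporal fusion choice; the paper compares fusion strategies experimentally.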

The paper proposes a novel CNN-transformer network for change detection in high-resolution remote sensing images. First, the model uses a Siamese backbone to extract hierarchical features from the two input images. Next, the tokenizer converts the deepest features of the two branches into semantic tokens, which are then fed into the transformer module for global spatio-temporal context modelling. Experiments are designed at this stage to identify the most appropriate bi-temporal information fusion strategy. After the context-rich semantic tokens are reshaped into pixel-level features, the refined high-level features are fused with the low-level features of each raw image through skip connections, reducing the loss of detail and locating the changed regions more accurately. In addition, CBAM is integrated into the last upsampling block of the cascaded decoder to smooth the semantic gaps between heterogeneous features. It also aids change detection by highlighting changes of interest and suppressing irrelevant information in the channel and spatial domains. The effectiveness of CTCANet is confirmed by comparison with several advanced approaches on two publicly available datasets, LEVIR-CD and SYSU-CD. The results suggest that the proposed approach is more promising than the compared methods.
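For readers unfamiliar with CBAM, the following is a minimal pure-Python sketch of the standard module: channel attention first, using average- and max-pooled descriptors passed through a shared two-layer MLP, then spatial attention, which convolves the channel-wise average and max maps and applies a sigmoid. It shows only the module itself, not how CTCANet wires it into the last upsampling block. The weights, the 3×3 kernel (the original CBAM uses 7×7), and the toy input are hypothetical.

```python
import math
from typing import List

Tensor3 = List[List[List[float]]]  # indexed as [channel][row][col]
Matrix = List[List[float]]


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def mlp(vec: List[float], w1: Matrix, w2: Matrix) -> List[float]:
    """Bias-free two-layer MLP with ReLU, shared by both pooled descriptors."""
    hidden = [max(0.0, sum(a * b for a, b in zip(row, vec))) for row in w1]
    return [sum(a * b for a, b in zip(row, hidden)) for row in w2]


def channel_attention(x: Tensor3, w1: Matrix, w2: Matrix) -> Tensor3:
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in x]  # global avg pool
    mx = [max(map(max, ch)) for ch in x]                            # global max pool
    scale = [sigmoid(a + m) for a, m in zip(mlp(avg, w1, w2), mlp(mx, w1, w2))]
    return [[[v * s for v in row] for row in ch] for ch, s in zip(x, scale)]


def spatial_attention(x: Tensor3, kernel: Tensor3) -> Tensor3:
    """kernel has shape [2][k][k]: one k x k filter for the avg map, one for the max map."""
    num_c, h, w = len(x), len(x[0]), len(x[0][0])
    avg_map = [[sum(x[c][i][j] for c in range(num_c)) / num_c for j in range(w)] for i in range(h)]
    max_map = [[max(x[c][i][j] for c in range(num_c)) for j in range(w)] for i in range(h)]
    pooled = [avg_map, max_map]
    k = len(kernel[0])
    pad = k // 2
    mask = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for p in range(2):
                for di in range(k):
                    for dj in range(k):
                        ii, jj = i + di - pad, j + dj - pad
                        if 0 <= ii < h and 0 <= jj < w:  # zero padding at the borders
                            acc += kernel[p][di][dj] * pooled[p][ii][jj]
            mask[i][j] = sigmoid(acc)
    return [[[x[c][i][j] * mask[i][j] for j in range(w)] for i in range(h)] for c in range(num_c)]


def cbam(x: Tensor3, w1: Matrix, w2: Matrix, kernel: Tensor3) -> Tensor3:
    """Sequential CBAM: channel attention followed by spatial attention."""
    return spatial_attention(channel_attention(x, w1, w2), kernel)


# Hypothetical input: C = 2 channels on a 3x3 grid, reduction ratio r = 2 (hidden size 1).
x = [[[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]],
     [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]]
w1 = [[0.5, -0.5]]    # (C/r) x C
w2 = [[1.0], [-1.0]]  # C x (C/r)
kernel = [[[0.1] * 3 for _ in range(3)] for _ in range(2)]  # 2 x 3 x 3
y = cbam(x, w1, w2, kernel)
```

Because both attention masks lie in (0, 1), CBAM can only re-weight features: with these toy weights the second channel is almost entirely suppressed. This is the "highlight relevant, suppress irrelevant" behaviour the article attributes to CBAM.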

The first author of the paper is graduate student Yin Mengmeng, and the corresponding author is Professor Chen Zhibo. The research was funded by the National Forestry and Grassland Administration of China (grant number 2021133108) under the program “Forestry, Grass Technology Promotion APP Information Service”.

Paper link: https://www.mdpi.com/2072-4292/15/9/2406